What the OECD Skills Outlook 2025 means for VET and AI

Skills and competencies have always been central to vocational […]

Generative AI and the Future of K-12 Education – Towards Sustainable and Ethical Innovations to Strengthen Human Agency

Maria Perifanou has announced the Call for Papers for a Special Issue in the Educational Technology Research and Development (ETRD) Journal on Generative AI and the Future of K-12 Education. The issue aims to advance research, theory, and practice on the responsible, sustainable, and ethical use of GenAI in K-12 education, promoting the significance of human agency within GenAI implementations. It invites contributions that explore and discuss the ethical, equity, and policy dimensions of GenAI adoption in K-12 educational contexts. Submissions may include theoretical reviews discussing frameworks, theoretical and conceptual analyses, ethical implications, empirical studies, and critical reviews. Collectively, these contributions […]

Beyond the Hype: What AI Really Means for Pedagogy and the Role of Teachers and Trainers

There is no shortage of noise about Artificial Intelligence in education. We are bombarded with daily announcements of new tools and bold predictions, swinging from utopian visions of personalized learning to dystopian fears of teacher redundancy. It can be difficult to find a signal in the noise. A new white paper by Tom Chatfield, “AI and the Future of Pedagogy,” is a welcome intervention. It cuts through the hype and grounds the conversation not in the technology itself, but in the principles of how humans learn. For those of us in vocational education and training (VET) across Europe who […]

DigComp 3.0: A New Milestone for Digital Competence in Europe

The newly published DigComp 3.0 marks an important update […]

AI Ready Schools

Last week we celebrated the kick-off of a new AI schools project at our first meeting in Leuven. The project builds on the work we did in AI@School and previous Taccle projects in that we will work with schools, teachers and pupils to co-create curriculum-linked resources. AI Ready is an Erasmus+ initiative designed to prepare secondary schools for the digital future by equipping the educational community with the knowledge and skills to understand and use Artificial Intelligence (AI) effectively. Being AI Ready isn’t just about knowing AI; it’s about making informed decisions, understanding its potential, and using it ethically […]

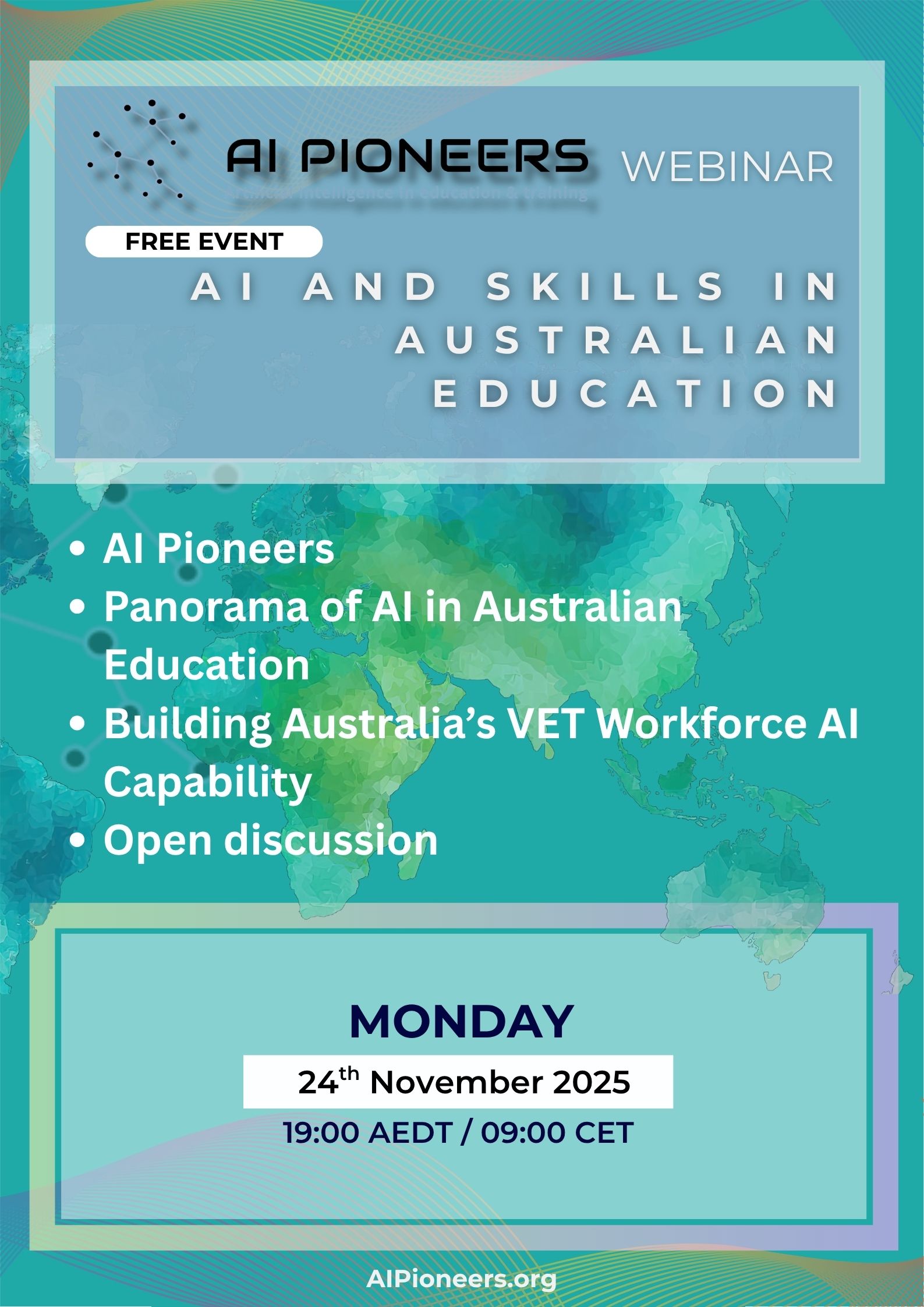

Recording now available for our webinar on AI and Skills in Australia

Our network members in Australia present their work and […]

Digital Literacies Network

I am very happy to see the launch of the Digital Literacies Network, designed to empower individuals and communities and launched by my long-time friend Cristina Costa, Associate Professor at Durham University in the UK, and her colleague Michaela Oliver. The Digital Literacies Network, they say, “is dedicated to empowering digital citizens through collaborative learning and creative practice in digital culture. We believe that digital literacy is not just about technical skills, but about cultural knowledge, identity, voice, and participation in a diverse, interconnected world. We strive to: • Co-produce knowledge: working with young […]

On bursting bubbles, AI shopping and AI slop

It’s a shorter article this week as I am off to Leuven in Belgium for the kick-off meeting of a new project for schools in Europe, AI Ready. I’ll report on that meeting next week. But as I write, the news is buzzing with rumours about a market correction, or put more vividly a burst bubble, in the value of AI companies. It’s been some time coming. Market prices of companies like OpenAI, Microsoft, Anthropic and of course Nvidia have soared in recent months. Vast sums have been promised for developing the AI infrastructure with limited justification. Indeed much of […]

Resources for migrant education

The AI Cookbook Inventory of Best Practices and Tools […]

AI Pioneers Final Conference Book is published 🥳

The AI Pioneers project team is delighted to announce […]