AI Degrees: A Gold Rush for Skills or a Curriculum Problem?

The rapid ascent of Artificial Intelligence from a niche technical field to a pervasive societal force has triggered a predictable response from higher education. Across the UK and Europe, universities are scrambling to offer programmes in AI, responding to soaring student demand and the promise of a lucrative job market. Data from the UK’s Higher Education Statistics Agency (Hesa) reveals a dramatic surge, with the number of students enrolled in AI courses trebling since 2019-20 to reach 10,825 in the 2024-25 academic year [1]. This represents a 19 per cent increase in a single year, making it one of the […]

The kids aren’t alright

In a recent Substack post, Jared Cooney Horvath unpacks […]

Why should young people pay for the sins of the big tech bros?

Cristina Costa has published an excellent article on the Digital Literacies Network website: Misplaced accountability: Why should young people be made to pay for the problems Big Tech create? With proposals to ban young people under the age of 16 from social media spreading, she asks what underpins these recommendations that claim to safeguard young people’s well-being, and whether the bans really guarantee digital welfare. A recent Guardian follow-up to earlier interviews with young people in Australia, the first country to introduce such a ban, casts doubt on its effectiveness […]

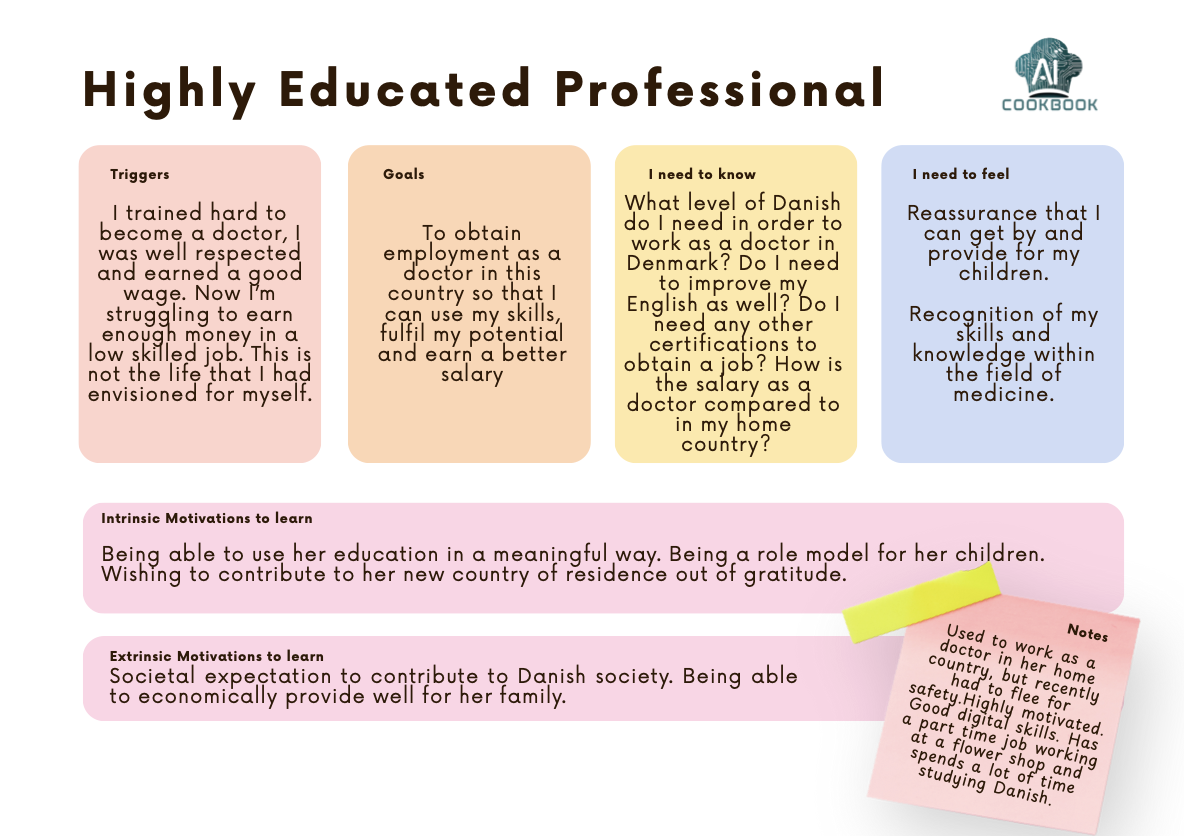

A Persona-Driven Approach to Skills for Migrant Learners

This week the AI Cookbook team met in Athens […]

The AI Employment Paradox: Navigating the Great Divide in European Workplaces

One of the most persistent themes in discussions around Artificial Intelligence is its impact on employment. This area is fraught with contradictions, fuelling a confusing public discourse that oscillates between utopian promises of enhanced productivity and dystopian fears of mass unemployment. Many workers, policymakers, and educators are understandably worried. The recent release of initial findings from the 2024 AI skills survey by the European Centre for the Development of Vocational Training (Cedefop) provides a crucial, data-driven lens through which to examine these concerns, revealing a complex and uneven landscape across Europe [1]. When combined with other recent European studies, the data suggests […]

The Authenticity Crisis: AI and Assessment in Vocational Education and Training

Although the discussion has become quieter, concerns over AI and assessment have not gone away. While much of the debate has centred on academic integrity in schools and universities, the unique landscape of Vocational Education and Training (VET) presents a different, and arguably more complex, set of challenges and opportunities. For VET, a field fundamentally concerned with the development of real-world competence, the rise of AI in assessment is not just a technical question; it is a direct challenge to the very meaning of authenticity in learning and practice. Arguably, the core purpose of vocational assessment has always been to […]

Beyond 16 Percent: Are We Forgetting How Learning Works?

Professor Rose Luckin is Professor of Learner-Centred Design at the UCL Knowledge Lab in London, with over three decades of experience working with AI in education. Luckin has become an advisor to policymakers and educators worldwide, consistently urging a focus on how humans actually learn rather than on what technology can easily do. A recent reflection she posted on LinkedIn is particularly relevant for vocational education and training, challenging us to look past the marketing and consider the real nature of learning. The conversation often starts with a familiar story, one Luckin recounts of a parent asking if they should invest in a […]

Navigating the AI Wave: What Does the OECD’s Digital Education Outlook 2026 Say for VET?

The constant current of conversations around Artificial Intelligence in Education can often feel turbulent, with ever more utopian promises from the big AI companies offset by fears from AI critics in the education research community. It is into this environment that the OECD has released its Digital Education Outlook 2026, a report that aims to ground the discussion in evidence and careful analysis. While the report covers the entire educational landscape, its findings carry implications for the vocational education and training (VET) community across Europe. This post seeks to explore what this comprehensive outlook means for teachers, trainers, researchers, […]

How do you know if you are AI-ready?

The AI Ready Schools Erasmus+ project is currently undertaking a literature review focusing on themes of AI readiness. Here are some preliminary findings: an AI-ready school appears to be characterised by a comprehensive mix of institutional policy, teacher preparation, and a commitment to human agency and ethics. It moves beyond tool adoption to foster an environment where technology serves as a “thinking partner” while keeping the “human in the loop”.

1. Institutional and Policy Frameworks
2. Teacher Readiness and Pedagogical Shifts
3. Curricular Integration and Literacy
4. Student Agency and Critical Thinking
5. Inclusive and Social Practices

Follow the AI Ready project […]

The Ladder, the Economy, and AI: A Deepening Debate for VET

Earlier this week, I wrote about the idea that Artificial Intelligence might be removing the first rung of the career ladder for young graduates. The piece was a reflection on the work of Alberto Romero, who argues that the automation of routine, entry-level tasks is creating a long-term talent pipeline crisis. It is a narrative that worries many of us in education and training, because we are fundamentally in the business of helping people get onto that very ladder. However, the conversation around AI is never simple, and a counter-argument has emerged that challenges this narrative. In a recent […]