72% of the UK public say that laws and regulation would increase their comfort with AI

In all the debates about AI in education, one factor seems fairly consistent: despite pleas from the big AI providers (OpenAI, Anthropic and the rest of them) that regulation will hinder innovation, there appears to be a consensus that we need (better) regulation. The EU and national governments seem to be playing a leading role here, although how effective that regulation is remains open to question. The speed at which Generative AI is developing may also mean that regulations have to be revised faster than is normal.

Anyway, new research from the Alan Turing Institute and the Ada Lovelace Institute in the UK suggests that it is not only educationalists who want regulation.

The research explores the public’s awareness and perceptions of different uses of AI, their experiences of harm and their expectations in relation to governance, regulation and the role of AI in decision-making. 72% of the UK public say that laws and regulation would increase their comfort with AI, up from 62% in 2023.

Key findings include:

- Public awareness of different AI uses varies widely: it is high for driverless cars (93%) but low for benefits assessments (18%).

- Half of the UK public (50%) do not feel represented in decision-making around AI.

- Two thirds of the public (67%) have encountered AI-related harms, with false information, financial fraud and deepfakes being the most common.

- Ethnic minorities are more concerned about facial recognition in policing than the general population.

The survey also shows that exposure to harms from AI is widespread. Two thirds of the public (67%) reported that they have encountered some form of AI-related harm at least a few times, with false information (61%), financial fraud (58%) and deepfakes (58%) being the most common.

Professor Helen Margetts, Programme Director for Public Policy at the Alan Turing Institute, said: “To realise the many opportunities and benefits of AI, it will be important to build consideration of public views and experiences into decision-making about AI. These findings suggest the importance of the government’s promise in the AI Action Plan to fund regulators to scale up their AI capabilities and expertise, which should foster public trust.”

You can read the full report here.

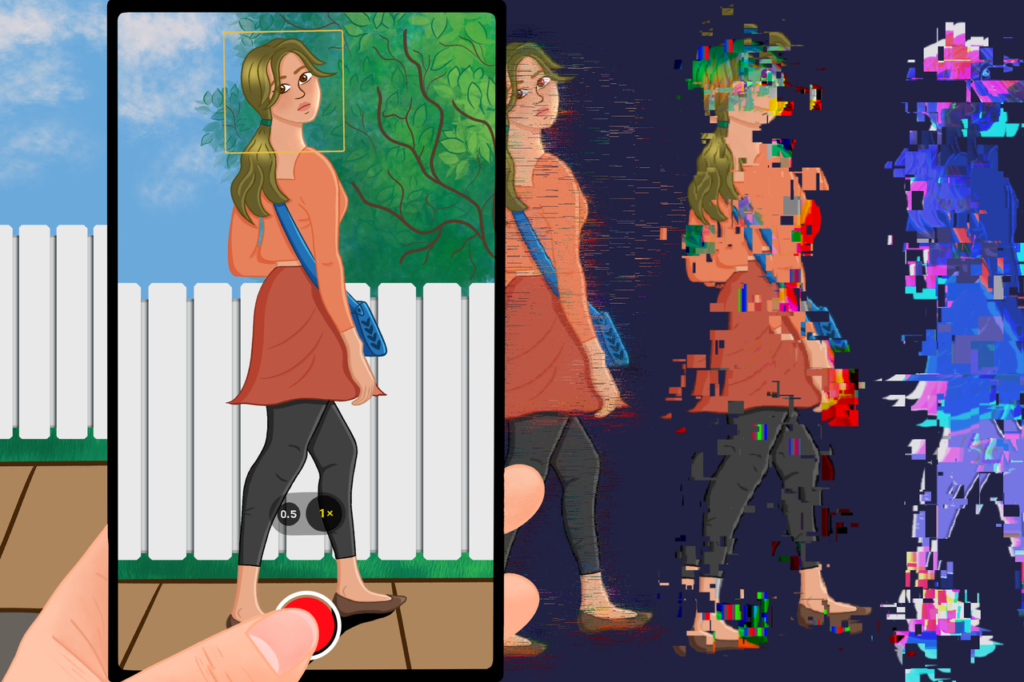

About the image

This image focuses on data manipulation in cases where individuals are filmed and their videos are shared without their consent. Once such videos enter the online world, personal data becomes highly susceptible to manipulation that further infringes on privacy rights. For instance, this data could be subjected to algorithmic analysis to predict its potential virality, incorporated into datasets for AI training, or heavily edited using AI tools. The glitched figures illustrate how an image of a person can be altered to the point where its origin is no longer traceable. This leads to a lack of transparency and deprives rights-holders of control over their data across digital spaces.